How to Preserve

Introduction to the Genetic Archive

by Michael Friend

2021-09-08

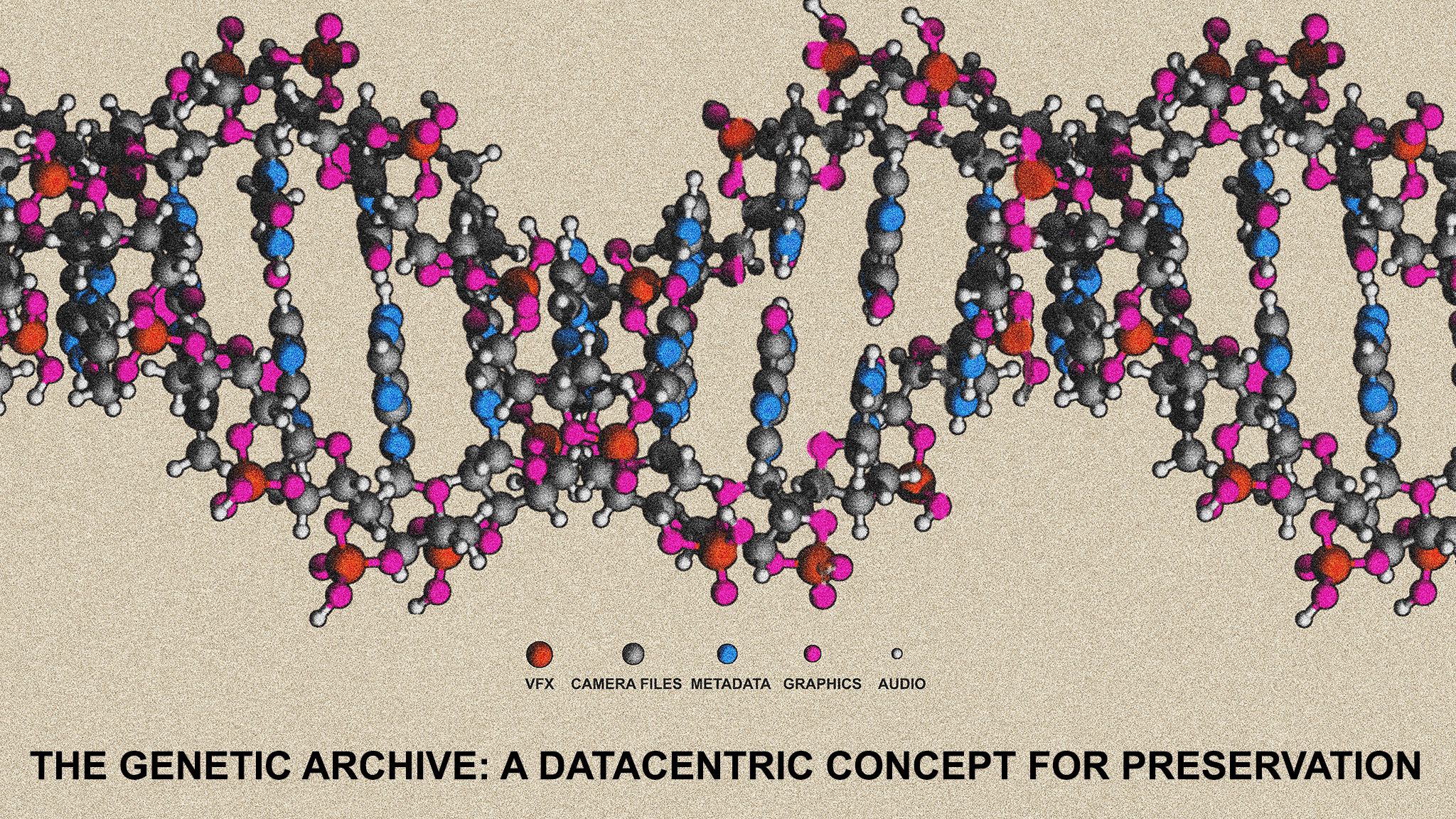

This essay presents a proposal for a future approach to motion picture archiving, referred to as the genetic archive. The fundamental principle is that it is not necessary (nor desirable) to keep every version of every element created in the process of shooting, posting, and distributing a work; instead, archive only the minimal, original, source elements necessary to reproduce it, with the metadata and recipes that are necessary and sufficient to assemble all valid versions. The term “genetic” archive is used because the critical source elements that are essential for the creation of viewable and distributable masters may be considered the “DNA” of the work, and the various versions derived for distribution and mastering are the “children” of this “parental” material at the work’s core.

We take as axiomatic that media works will need to be indefinitely remastered and optimized for new technical contexts, while maintaining the creative vision of the originators. We also believe that the most primordial images – camera files and VFX – are the unique and superior data resource for all future applications. We have seen improvements in extraction and debayering algorithms, and we can expect improvements in future images from the archive to be based on the advance of algorithmic protocols. We also take the “work” as axiomatic. This is the notion that defines the archive as the components necessary to make the valid versions of a show. We do not consider the excess data valuable – there may be some stock footage or other functionality that we have to account for; for example, we would have our stock footage library extract any neutral material from camera files. But basically, the idea that a work is infinitely expansible as new editorial versions is incorrect. There will be a very few editorial versions of the work, which will be perennially reconfigurable by the archive for future technical contexts. There will always be exceptions to this practice in terms of super-productions or idiosyncratic works. Documentaries have some special attributes, and currently, the VFX material is starting to displace simple camera files in ways that will change the organization of the deep archive.

The first driver for this kind of archive is deduplication on two axes. Currently, major productions produce gigantic archives of camera files and dailies. Over 90% of this data is useless and irrelevant, because we do not normally go back and use the alternate takes that comprise so much of the archive. The notion of the “work” is that the archive contains only the materials required to make any valid version of the show. Modern practice with respect to director's cuts, extended versions, etc. means that we will not have to go back into the unused camera files to make any of the valid versions of the show. So redundancy abatement #1 is the reduction of camera data by using the EDLs to isolate and archive only the files used in a valid version. The second abatement is along the temporal axis. Today, for an archive, we create a DI or CTM, a DSM or VAM, and frequently a DCDM and HDRDM. These are all excellent elements, but they are individually very expensive and need to be migrated or stored in a cloud. And yet, in the future, these elements will not be used. They are largely artifacts of today's preferred distribution and display modes. So instead of carrying this data volume perennially, we want to archive just those primordial files that are the source of the production (mainly the used camera files with handles and the VFX with layers) and the instructions. This frees the archive from the perennial cost of holding all these full-weight versions.

The second driver is organization. Today, we take in this mass of material with very little documentation or strong organization. We need to capture more of the process metadata that allows us to organize and document the full deep archive (this is clearly an opportunity for IMF). Organization will save time and money in remastering. The reduction of data we talked about above is useful to this process because it eliminates the need to track and document useless data, and makes the path to remastering much clearer. To be clear, it seems likely that future iterations of the product will be created using things like EDLs, CDLs, “comparison engine” software and AI for matching data into a new technical space. In other words, while we want to collect process metadata and some of the components of the workflow, we think that eventually, these will be superseded by modern data management tools.

We foresee the capture of the full production archive, and the reduction and organization of that data into a genetic archive of the work.

What is in the genetic archive? It is the aggregate of original data files used in the production. The production archive is the vast amount of heterogenous data created in the course of the production. The genetic archive is the organization of the critical and most primordial forms of that data for redeployment in the future.

Unlike the traditional archive, which is normally a collection of finished elements, the genetic archive is a set of resources which parent all valid versions of a show, and the instructions to finish these resources as valid versions. This is similar in some ways to an IMF, which is a collection of resources organized to produce any desired version. These variations are assembled by playlists. A genetic archive would be organized as playlists – one for each version (theatrical, home video, extended version). The genetic archive is not organized to localize the versions – localization is normally handled by the IMF. But the playlists of a genetic archive would assemble the DI, the DSM, the HDRDM, the DCDM, and would not only parent new or modified IMFs as necessary, but also any future ‘technical versions’ required. For example, the genetic archive would be the highest quality source for the creation of new elements – a super-HDR version, an 8k version, an HFR/HDR hybrid – whatever technical variations may be called for in future contexts.

The genetic archive is organized out of the massive body of production material that constitutes the ”production archive,” the primordial elements used to make the show:

- Camera files: charts and tests, all cameras, dailies. From the totality of camera files, the genetic archive would select and preserve only the used shots. This typically includes multiple units, cameras and file types.

- VFX: the genetic archive for VFX would consist of the final elements and all the plates, composites, mattes, and layers as uncomposited elements. This is in order to be able to adjust the VFX in remastering.

- Other visual data: Includes opticals and other visual editing elements used to make finished versions of the product, as well as stock footage and titling. These elements may have different levels of quality, but they are the ‘most original’ state of what was used in the show. Opticals are composite units built to support specific effects in imagery in addition to VFX. Stock footage is generally externally acquired, and so quality is dependent on what the production accessed. In the case of documentary stock footage, this may be distressed archival footage derived from many sources, standards, and levels of quality. As far as titling, this includes both text and image, and is normally a layer or alpha-channel resource that applies text to picture. Titling also includes art titles that may exist as a standalone resource like a finished effect, but wherever possible the separate components of the title build should be maintained.

(These elements described in the three sections above constitute the entire source for the image of a given product.)

- Audio: the ‘collated archive,’ all production data and conformed audio, from earliest stems to final mixes. The retention of the audio components as individual items allows full access to the mix in remastering. Also, since audio data is lightweight and is normally delivered in a ProTools matrix, it is relatively economical to organize and archive.

- Metadata: EDLs, CDLs, Baselight (Resolve, etc. and other DLs (framing, etc.); color correction (DI suite) data re-coloring, windowing and local adjustment, cropping, and matting; HDR data, other processing data (i.e., “Dolby Vision”); ASC CDL metadata. Increasingly the modern production workflow is guided by metadata of many different types. The most primary metadata is simple descriptive information. But there is a tremendous amount of process metadata that describes the changes – in editing, color, shape, texture, and look of the work.

- Editorial drives (mainly Avid or other drives that contain the developmental history of the product and instructions on how data was processed). The editorial drives contain the assembly history of the product, and contain the process metadata specific to the various versions of the film. This includes the organization of the various valid versions of the work as well as para-data – gag reels, screen tests, alternate scenes, etc.

These are the data classes that are produced by the production, and a targeted subset of these resources becomes the genetic archive.

Many resources are important to retain in a modern media enterprise, but not all these resources are archival, and not all of these resources are equally important in an archival context. For example, a DSM or DI is more important than a DCP or a ProRes proxy. In restoration, an SRC may very well be more important than a more processed IPDC. This is because the former types of data are richer and can support a much wider variety of processing and remastering activities. There is a hierarchy of values implicit in the traditional archive. The most original data (the cut original camera negative) is at its apex. From the perspective of the genetic archive, the more primordial files have precedence over derivative elements. Generally speaking, the distribution class of materials is secondary – members of that class can be recreated from primal archive elements or assets (that is, if all localization assets are lost, new localization material can be created or recreated from the primary archive; but if original camera files are lost, that level of data is lost and irreplaceable).

The reality of the workflow in the motion imaging business, which starts with the most original data and systematically destroys data in a deliberate process to create a highly specific viewing experience, is at the core of this principle. The process of replacing an element or creating an element for a new technical context, each time it is performed, is a ‘downhill’ process through which data is permanently lost in the derivative element (the replaced or new master). This process may be performed multiple times in order to modulate data for different target environments, but the process is dependent upon the availability of the primordial, super-abundant data resource. This is not to suggest this data is the only locus of value in the archive, which also retains the instructions used to create each version of a product, and reference data that can be used to support remastering.

The idea of a genetic archive is to maintain a resource of the minimal elements necessary to reproduce the work, and the instruction sets necessary to assemble all valid versions. One of the objectives of the genetic archive is to collect all process metadata and instructions to be able to reproduce any piece of the workflow chain between primordial camera file and final picture data ready to go to distribution. It's unlikely that these metadata instructions will be useful for the future, as we will likely use AI to conform, reprocess and re-grade new versions of a work in new technical environments rather than replicating the arcane workflow of the original production. Nevertheless, the metadata and editorial drives that contain the record of these instructions are important resources for the transitional state of archives. They usually contain information that can support and improve the process of remastering, regardless of whether that is done by methods close to the original production workflow, or whether the process is executed using AI or machine learning. Similarly, the organization of primordial material need not follow EDLs if there is a ‘comparison engine' that can sort out the primordial data for re-mastering, but it will be helpful to have correct and comprehensive EDLs in the archive. Even if the sets of instructions are not re-used, they may contain important technical data, and since the instructions are comparatively small amounts of data, they should be retained in the archive. It is important to understand the process of remastering not as emulation of the original post-production workflow that created the final master, but as a process performed on the primordial data set in order to match the final visual values of the reference in the original and subsequent technical contexts.

Over time, new technical requirements for masters will have to be accommodated. Each time a new version is added by the remastering team, a new instruction set is added to the archive. One of the most challenging aspects of this process is the QC phase, where the new master is recreated from the new instructions. Once this ability to recreate a version is confirmed, it is not necessary to retain a physical iteration of the data, and the on-going work of deduplication is sustained. This process suggests the importance of having stable libraries of data at the root of the process. The genetic archive is open with respect to software. Better debayering, grain reduction, or up-resing algorithms which may become available can be imposed on the primordial data to meet processing requirements for new display environments. Because there is no point to emulating the original process, improvements can be made in that arc from code values to screen.

The genetic archive is a method for addressing some of the current problems of digital archiving for moving images. It is not a solution to every archive situation, nor does it engage with future forms of production which might produce a different type of data. But it does apply to the last twenty years of mainstream motion pictures, as well as the data that will be captured from legacy film archives.

I really like the idea of the “genetic archive” in regards to culling down the parts of the production archive that is important. Your article reminded me that we do need to get that industry glossary going since I was unfamiliar with a few of the acronyms you used. I’m on board with this genetic archive concept - I think the real work will be defining how deep you go to find the component assets that make up say, a VFX shot - what about things that are difficult to re-purpose like rigging for example? Or is the “flattened” CG animation good enough as the component asset? Either way, I’m looking forward to the discussion on this topic! Thank you for taking the time to write this, Michael!

One last comment - shouldn’t this be in the “What to Preserve” pillar?

Hi Ms. Chang,

Thanks for these words. I think the genetic archive could be in “what to preserve” or “how to preserve,” because we are suggesting changes in both the data and the workflow. The comment you make about vfx is prescient, and no we are not satisfied with the simple “flattened components” of vfx or of animation. We have specified collection of vfx layers, and we been trying to identify upstream resources that allow us to do a better job – a more data-centric job – of capturing animation data. I hope the animation question will be a part of our on-going conversations. And these current concerns suggest what some of our near-future issues will be. In an era of virtual production characterized by libraries and templates, archives of moving image works will have to accommodate references – “pointers” – to libraries and unrendered resources and possibly to software configurations. New forms of visual effects and volumetric production (already manifest in games) will present challenges beyond the genetic archive. So often, archives have sought solutions in the past. The techniques of the genetic archive are not yet developed, and we need to evolve the genetic archive as a configuration for the present regime of production. But it’s not too soon to start specifying the next archive, based on virtual production and resources in the cloud. Because technology doesn’t wait for the archives!

I’m excited to dive into the concept of the genetic archive and how it could be implemented for real! I do like your idea of saving VFX in layers - EXR has that capability, so perhaps a more standardized way to do that would work.

There is an issue at the heart of the genetic archive proposal that requires some foresight and planning. That is the conception of how the primordial data resources of the archive will be used to fulfil future needs. At the heart of the genetic archive is the notion that the most original files (i.e., camera files) provide the purest version of the data used to create a show. These primordial resources are regarded in the same way as an original negative in film. Over many decades, we learned to appreciate the superabundant data resource that was the original negative, and the evolving technology (film chains, telecines, scanners) that were able to extract more and more data from these negatives. In our data-centric archive, we do not expect better machines, but we anticipate the development of algorithmic approaches to extraction of data from camera files that is of higher quality and also that will be more commensurate with an evolved media environment and its requirements. This is why we do not support the transcoding of camera files to an “archival” format. These formats raise the prospect of truncating the original data scheme of the camera files, and could preclude the further development of superior methods of extraction. This is of course a point to be technically argued.

To return to the contents of the genetic archive, the proposal calls for the capture of the editorial drives and the process metadata used in the workflow between primordial files and the final data object. In the first instance, this should give remastering teams all the data necessary to rebuild any stage of the project (for instance, the DI, the VAM or more specific distribution-facing forms such as the DCDM or IMF. This knowledge chain is important at the point that a production is completed because it allows for the virtualization of any downstream products, thus making the distribution tier much more economical. But it is not necessarily the long-term method for obtaining new forms of the show. In other words, the ultimate goal of the genetic archive is not to emulate the entire production workflow in order to obtain these new forms. We expect that with the primordial files and the delta represented by the final, finished show (the DI, for example), an AI or ML type software device will be able to extract and extrapolate a new form of the show (say in a new color space, at a new resolution or dynamic range) for a future technical environment. David Suggs in a note to us suggested the importance of retaining an archival copy of the final, finished show as the base-level model for future iterations and products. This is an extremely important part of the genetic archive, and will be necessary until such time as we have sufficiently virtualized that element.

As the genetic archive is developed, we need to understand what information needs to be retained, and how to harvest that information from the editorial drives. Doing so will allow the archive to remove the full editorial drive from the genetic archive, thus enacting the data triage that will allow the genetic archive to be more economical. In the initial stages of the genetic archive, it will be important to retain the full editorial drive data as a map to the workflow that created the original product.